Table of contents

- Could quantum technology be the next advance in machine learning?

- What is quantum machine learning?

- How might quantum improve machine learning?

- 1. If quantum computers can speed up linear algebra subroutines, they can speed up machine learning

- 2. Quantum parallelism helps train models faster

- 3. Quantum computers can model highly correlated distributions in a way that classical computers cannot.

- So can quantum improve machine learning?

- Conclusion

Could quantum technology be the next advance in machine learning?

Over the last few years, quantum computing has moved into the spotlight as a computing model that could take the power of today’s computers to a whole new level. Tech media report on every small advance in the field, however tentative. It has been a fascinating time, yet for many people the field itself remains a mystery.

Quantum computing promises to power a variety of applications that matter in today’s world, from cybersecurity to medicine to machine learning. This wide range of applications is one of the main reasons the field attracts so much attention.

But how can quantum computing advance data science? What can it offer that classical computers could not?

You may recently have heard the term “quantum machine learning”, or “QML” for short. But what exactly is it?

This article aims to shed some light on what quantum machine learning is and how quantum techniques could improve classical machine learning.

What is quantum machine learning?

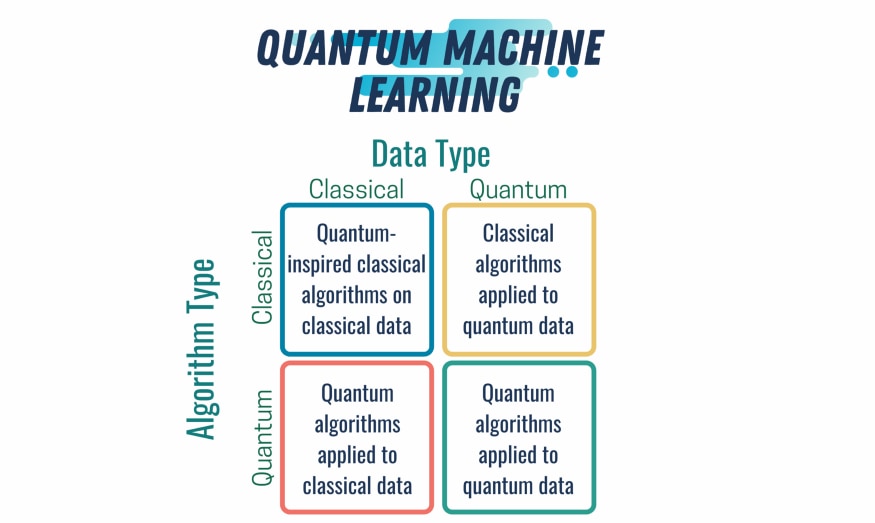

Let’s take a step back and look at machine learning from a broader perspective. At its core, machine learning involves two ingredients: data and algorithms. So is quantum machine learning about the data, the algorithms, or both?

This leads to the fundamental question: what is quantum machine learning? To answer it, consider the diagram above.

The term “quantum machine learning” covers four kinds of scenarios:

- Classical algorithms applied to classical data, inspired by quantum computing: tensor networks, dequantized recommendation system algorithms, etc.

- Classical algorithms applied to quantum data: neural networks that represent quantum states, optimization of pulse sequences, etc.

- Quantum algorithms applied to classical data: quantum optimization algorithms, quantum classification of classical data, etc.

- Quantum algorithms applied to quantum data: quantum signal processing, quantum hardware modeling, etc.

In other words, the data can be classical or quantum, and so can the algorithm, which gives four combinations in total. The combination of classical data and classical algorithms (upper left in the figure) is essentially synonymous with existing classical machine learning.

The next section, “How might quantum improve machine learning?”, examines the three combinations other than the purely classical one. They map to its headings as follows:

- Quantum algorithms processing classical data (bottom left of the figure): discussed under “1. If quantum computers can speed up linear algebra subroutines, they can speed up machine learning”.

- Classical algorithms processing quantum data (upper right of the figure): discussed under “2. Quantum parallelism helps train models faster”.

- Quantum algorithms processing quantum data (lower right of the figure): discussed under “3. Quantum computers can model highly correlated distributions in a way that classical computers cannot.”

How might quantum improve machine learning?

There are several arguments for how quantum computing could make machine learning better. Below are three of the most frequently discussed.

1. If quantum computers can speed up linear algebra subroutines, they can speed up machine learning

Linear algebra is at the heart of machine learning. In particular, a small set of linear algebra routines, such as those standardized by BLAS (Basic Linear Algebra Subprograms) and the libraries built on top of it, underpins most machine learning algorithms. The key operations include matrix multiplication, Fourier transforms, and solving linear systems.
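
As a small classical illustration of how these routines show up in practice, the Python sketch below (assuming NumPy is available) fits an ordinary least-squares linear regression through the normal equations. The whole fit reduces to matrix multiplications and one linear solve. The function name `fit_linear_regression` is hypothetical, chosen only for this example.

```python
import numpy as np


def fit_linear_regression(X, y):
    """Ordinary least squares via the normal equations (X^T X) w = X^T y."""
    gram = X.T @ X                      # matrix-matrix product (BLAS level 3)
    rhs = X.T @ y                       # matrix-vector product (BLAS level 2)
    return np.linalg.solve(gram, rhs)   # linear system solve (LAPACK, built on BLAS)


# Noiseless synthetic data, so the fit should recover the true weights.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
true_w = np.array([2.0, -1.0, 0.5])
y = X @ true_w
w = fit_linear_regression(X, y)
print(w)
```

In production code `np.linalg.lstsq` is numerically safer than forming X^T X explicitly; the normal equations are used here only because they make the matrix multiplication and the linear solve visible.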

Quantum algorithms for these subroutines promise exponential speedups. What is often left unsaid is that those speedups assume a quantum memory that holds the data in quantum form and feeds it directly to the quantum processor. Without such a memory, the exponential speedup is out of reach.

Unfortunately, this condition is unlikely to be met any time soon. Quantum memory is one of the hardest open research problems at the moment, and there is no concrete answer as to whether, or when, a practical quantum memory can be built.

So is it impossible to speed up machine learning without quantum memory?

The quantum computers being researched today are not purely quantum systems. The data is classical and stored in classical memory, from which it is passed to the quantum processor. This communication between classical memory and the quantum processor is what keeps us from reaching exponential speedups.

Even so, running linear algebra subroutines on a quantum processor that exchanges data with classical memory can still be somewhat faster (though not exponentially faster) than a purely classical machine learning approach.
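
The loading bottleneck can be made concrete with amplitude encoding, one common way of putting classical data into a quantum state (the article does not name a scheme, so this choice is an assumption). The hypothetical helper `amplitude_encode` below, written in Python with NumPy, maps N numbers onto the amplitudes of only about log2(N) qubits, yet it has to read and rescale all N entries first. That O(N) classical step, plus a state-preparation circuit on real hardware, sits in front of any quantum speedup.

```python
import math

import numpy as np


def amplitude_encode(x):
    """Illustrative (hypothetical) amplitude encoding of a classical vector.

    Pads x with zeros to the next power of two and normalizes it, so the
    result could serve as the amplitudes of an n-qubit state. Every entry
    of x is read and rescaled, which is O(N) classical work.
    """
    x = np.asarray(x, dtype=float)
    n_qubits = max(1, math.ceil(math.log2(len(x))))
    padded = np.zeros(2 ** n_qubits)
    padded[: len(x)] = x
    norm = np.linalg.norm(padded)
    if norm == 0:
        raise ValueError("the zero vector cannot be encoded as a quantum state")
    return padded / norm, n_qubits


data = np.arange(1, 1001)            # N = 1000 classical numbers
amplitudes, n = amplitude_encode(data)
print(n, len(amplitudes))            # prints: 10 1024
```

Ten qubits hold 1,024 amplitudes, but nothing in this sketch is cheaper than O(N): the savings in qubit count do not remove the cost of moving the data.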

2. Quantum parallelism helps train models faster

One of the main sources of a quantum computer’s power is superposition, which allows it to work with many quantum states simultaneously.
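
A tiny state-vector simulation shows what superposition means in practice. The Python/NumPy sketch below (the function name `uniform_superposition` is hypothetical) applies a Hadamard gate to each of three qubits, spreading the amplitude evenly over all 2^3 = 8 basis states. Note that measuring the register returns just one of those basis states at random, so a superposition cannot simply be read out in full. The classical simulation also builds a 2^n by 2^n matrix, which is why it only works for small n.

```python
import numpy as np

# Single-qubit Hadamard gate.
H = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)


def uniform_superposition(n_qubits):
    """Return the state vector after applying H to every qubit of |00...0>."""
    state = np.zeros(2 ** n_qubits)
    state[0] = 1.0                      # start in the basis state |00...0>
    op = np.array([[1.0]])
    for _ in range(n_qubits):
        op = np.kron(op, H)             # tensor product of n Hadamard gates
    return op @ state


psi = uniform_superposition(3)
probabilities = psi ** 2                # Born rule (amplitudes are real here)
rng = np.random.default_rng(1)
outcome = rng.choice(len(psi), p=probabilities)  # a measurement yields one index
print(probabilities, outcome)
```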

The argument goes like this: if we could train a model on all possible training sets in superposition, training might be faster and more efficient.

“Efficient” here means one of two things:

- Exponentially less data is required to train the model -> researchers have found this claim doubtful. However, in some cases it may still be possible to speed up the linear algebra calculations.

- Models train faster -> this claim rests on the speedups obtained by quantizing classical algorithms, for example by replacing a search step with Grover’s algorithm. The result is at most a quadratic speedup, not an exponential one.

When a classical machine learning algorithm is simply run on a quantum computer, the best we can hope for is a quadratic speedup. Going beyond that requires changing the algorithm itself.
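
The quadratic ceiling can be seen in a classical state-vector simulation of Grover’s search. The Python/NumPy sketch below (the function `grover_search` is hypothetical) looks for one marked item among N = 2^n. It needs about (pi/4)·sqrt(N) oracle calls, versus roughly N/2 lookups on average for a classical linear scan, which is a quadratic rather than exponential gain. The simulation itself still does O(N) work per step, so it illustrates query counts, not real-world runtime.

```python
import math

import numpy as np


def grover_search(n_qubits, marked):
    """Simulate Grover search for one marked index among N = 2**n_qubits items.

    Returns the number of oracle calls used and the probability that a
    final measurement yields the marked index.
    """
    N = 2 ** n_qubits
    state = np.full(N, 1.0 / math.sqrt(N))             # uniform superposition
    iterations = math.floor(math.pi / 4 * math.sqrt(N))
    for _ in range(iterations):
        state[marked] *= -1.0                          # oracle: flip the marked amplitude
        state = 2.0 * state.mean() - state             # diffusion: inversion about the mean
    return iterations, float(state[marked] ** 2)


N = 1024
calls, p_success = grover_search(10, marked=123)
print(f"Grover oracle calls: {calls}, success probability: {p_success:.4f}")
print(f"Classical linear scan, average lookups: {N / 2:.0f}")
```

Going from 10 to 20 qubits multiplies N by 1,024 but the number of Grover oracle calls by only about 32, which is exactly the square-root scaling described above.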

3. Quantum computers can model highly correlated distributions in a way that classical computers cannot.

This claim is true. However, recent research has shown that quantum generative models are not, by themselves, enough to obtain a quantum advantage. Moreover, even on datasets generated by quantum processes, some classical models can outperform their quantum counterparts.

So can quantum improve machine learning?

Simply put, YES. But don’t expect exponential speedups anytime soon. Once we have a fully functional quantum computer, we may be able to revisit the above arguments and revalidate them.

For now, the pursuit of quantum machine learning should focus less on speeding up existing machine learning algorithms and more on discovering fundamentally new algorithms that help us solve problems in better ways.

In my view, quantum technology is more about teaching us to find new things than about looking at the same things from new angles.

Conclusion

Quantum computing has great potential to improve and reshape today’s technology. It is by no means a new field, being almost as old as computing itself, but in the last few years it has attracted a lot of attention.

In recent years, quantum technology has moved from pure theory into practice, and that transition is why it has suddenly caught the attention of both the media and the research community. For the first time in quantum history, anyone can access a quantum machine and run small to medium-sized programs on it.

Machine learning is one area that quantum technology is expected to improve. However, it is less clear how exactly it will help.

In this article, I explored the ways in which quantum could improve machine learning, and whether those methods are viable and realistic.

Quantum computing may not be that powerful yet, and it cannot currently run practical machine learning applications. Still, the future looks bright, and it will likely bring great surprises and advances not just in machine learning but in many other areas.