*This article was contributed by the AI Defense Laboratory, a joint research organization of ChillStack Co., Ltd. and Mitsui Bussan Secure Directions Co., Ltd.

This series, titled “AI Security Super Primer”*1, covers a wide range of AI security topics in an easy-to-understand manner. Reading it will give you a bird’s-eye view of AI security.

*1 In this column, “AI” refers to computer systems, built mainly with machine learning, that can perform tasks normally requiring human intelligence, such as image classification and speech recognition. We refer to the whole system as “AI”.

Table of contents

- Serial list

- Overview of this column

- How to achieve secure AI development?

- Incorporate quality assurance management into development

- Deep Learning Development Standard Contract

- Everyone involved in AI understands attack methods and countermeasures

- Incorporate security testing into the development process

- Summary

Serial list

The “AI Security Super Primer” series consists of eight columns.

Part 1: Introduction – Environment and security surrounding AI –

Part 2: Attacks that deceive AI – Adversarial samples –

Part 3: Attacks that hijack AI – Training data poisoning –

Part 4: AI privacy violations – Membership Inference –

Part 5: Attacks to tamper with AI inference logic – Node injection –

Part 6: Intrusions into AI systems – Abuse of machine learning frameworks –

Part 7: Background investigation of AI – OSINT against AI –

Part 8: How to develop secure AI? – Domestic and international guidelines –

Parts 1 to 7 have already been published, so please read them if you are interested.

Overview of this column

This column is Part 8, “How to develop secure AI? – Domestic and international guidelines –”.

Parts 1 through 7 introduced the security environment surrounding AI and specific attack methods against it. Protecting AI from these attacks requires secure AI development, so in this column we introduce guidelines and testing tools that we believe are useful for ensuring AI safety.

How to achieve secure AI development?

Where should we start in order to achieve secure AI development? The answer varies by development site, such as the company or organization, but in this column we divide the approach into the following three categories.

- Incorporate quality assurance management into development

- Everyone involved in AI understands attack methods and countermeasures

- Incorporate security testing into the development process

Now let’s look at them one by one.

Incorporate quality assurance management into development

Incorporating quality assurance management into AI development can be expected to strengthen AI's defenses against attacks.

For example, it is known that when an AI model overfits or its training data lacks variation, its robustness and inference accuracy decrease. In addition, as explained in “Part 2: Attacks that deceive AI – Adversarial samples –” and “Part 4: AI privacy violations – Membership Inference –” of this series, AI with low robustness is vulnerable to attacks such as adversarial samples and membership inference.
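To make the link between overfitting and membership inference concrete, here is a minimal sketch of the simplest membership inference attack: guess that a sample was in the training data whenever the model's confidence on its true label exceeds a threshold. The function names and the confidence values are invented for illustration; they are not taken from Part 4 or from any particular tool.

```python
def guess_member(confidence: float, threshold: float) -> bool:
    """Guess 'training member' when the model is very confident on the true label."""
    return confidence >= threshold


def attack_accuracy(member_confs, nonmember_confs, threshold):
    """Fraction of samples whose membership the threshold attack guesses correctly."""
    correct = sum(guess_member(c, threshold) for c in member_confs)
    correct += sum(not guess_member(c, threshold) for c in nonmember_confs)
    return correct / (len(member_confs) + len(nonmember_confs))


# Toy confidences (invented): an overfitted model is far more confident on the
# samples it was trained on than on unseen ones, so the gap leaks membership.
overfit_members = [0.99, 0.98, 0.97, 0.99]
overfit_nonmembers = [0.70, 0.60, 0.95, 0.55]

# A better-generalized model gives similar confidences to both groups.
general_members = [0.80, 0.75, 0.90, 0.70]
general_nonmembers = [0.78, 0.72, 0.88, 0.69]

print(attack_accuracy(overfit_members, overfit_nonmembers, threshold=0.96))  # 1.0
print(attack_accuracy(general_members, general_nonmembers, threshold=0.96))  # 0.5
```

Against the overfitted model the attack is always right, while against the generalized model it does no better than chance on this balanced set. Real attacks tune the threshold more carefully, but the principle is the same.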

Furthermore, as explained in “Part 3: Attacks that hijack AI – Training data poisoning –”, if the quality of the training data is low because of bias or contamination, a backdoor may be planted in the AI, causing it to behave unexpectedly.

It follows that AI's resistance to attacks can be improved to some extent by steadily practicing the “obvious” basics of AI development, such as improving model robustness and training data quality.

Quality assurance guidelines help put this into practice.

This section introduces the following three.

AI Product Quality Assurance Guidelines

The AI Product Quality Assurance Guidelines are published by the QA4AI Consortium and recommend checking AI quality along the following five axes.

- Data Integrity

- Model Robustness

- System Quality

- Process Agility

- Customer Expectations

For Data Integrity, the guidelines ask whether the samples satisfy the statistical properties required for data quality; whether the data contains unnecessary data, noise, or data from different populations; whether it is biased or contaminated; and whether relying on a single data source is sufficient. These checks serve as countermeasures against the training data poisoning mentioned above.
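As a rough illustration of automating a few of these Data Integrity checks, the sketch below flags exact duplicates, duplicates with conflicting labels (a possible sign of label-flipping contamination), feature values outside an expected range, and a dominant label. The function, its thresholds, and the toy data are hypothetical; the QA4AI guidelines describe what to check, not this code.

```python
from collections import Counter


def integrity_report(samples, labels, lo, hi, max_share=0.8):
    """Run simple data-integrity checks on a list of feature vectors and labels."""
    first_seen = {}
    duplicates, conflicts = [], []
    for i, (x, y) in enumerate(zip(samples, labels)):
        key = tuple(x)
        if key in first_seen:
            j = first_seen[key]
            duplicates.append((j, i))
            if labels[j] != y:
                # Same input with different labels: possible label-flip poisoning.
                conflicts.append((j, i))
        else:
            first_seen[key] = i

    # Values outside the expected range may be noise or data from another population.
    out_of_range = [i for i, x in enumerate(samples) if any(v < lo or v > hi for v in x)]

    # Share of each label; one above max_share indicates heavy class imbalance (bias).
    counts = Counter(labels)
    shares = {label: n / len(labels) for label, n in counts.items()}
    dominant = [label for label, s in shares.items() if s > max_share]

    return {
        "duplicates": duplicates,
        "conflicting_labels": conflicts,
        "out_of_range": out_of_range,
        "label_shares": shares,
        "dominant_labels": dominant,
    }


samples = [[0.1, 0.2], [0.1, 0.2], [0.5, 1.7], [0.3, 0.4]]
labels = ["cat", "dog", "cat", "cat"]
print(integrity_report(samples, labels, lo=0.0, hi=1.0, max_share=0.7))
```

Here sample 1 duplicates sample 0 with a different label, sample 2 has a feature outside [0, 1], and "cat" makes up 75% of the labels. Checks like these only surface candidates; a person still has to decide whether each one is genuine contamination.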

Likewise, Model Robustness calls for “considering model accuracy, robustness, degradation, and so on”, which serves as a countermeasure against the adversarial samples and membership inference mentioned above.
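One simple way to act on the robustness axis is to compare accuracy on clean inputs with accuracy on randomly perturbed inputs. The sketch below does this for a hand-set linear classifier; the weights, the data, and the Gaussian noise model are assumptions chosen only to show the idea, and random noise is a much weaker test than the adversarial samples an attacker would deliberately craft.

```python
import random


def predict(x, w=(1.0, 1.0), b=-1.0):
    """Toy linear classifier (hypothetical weights): class 1 if w.x + b > 0, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def accuracy(xs, ys):
    """Fraction of inputs classified correctly."""
    return sum(predict(x) == y for x, y in zip(xs, ys)) / len(ys)


def noisy_accuracy(xs, ys, sigma, trials=100, seed=0):
    """Average accuracy when Gaussian noise with std `sigma` is added to every feature."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        noisy = [[v + rng.gauss(0.0, sigma) for v in x] for x in xs]
        total += accuracy(noisy, ys)
    return total / trials


xs = [[0.2, 0.2], [0.9, 0.9], [0.45, 0.5], [0.6, 0.6]]
ys = [0, 1, 0, 1]
print("clean:", accuracy(xs, ys))
print("noisy:", noisy_accuracy(xs, ys, sigma=0.3))
```

A large gap between the two numbers, driven mostly by inputs near the decision boundary such as [0.45, 0.5], suggests that the model is fragile and worth hardening before release.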

These guidelines contain detailed checklists for improving quality in ways that also contribute to security, such as Data Integrity and Model Robustness. Since a “vulnerability” can be regarded as a kind of bug (a security bug), improving AI quality to eliminate bugs also reduces vulnerabilities.

Contract guidelines for the use of AI and data

The contract guidelines for the use of AI and data were published by the Ministry of Economy, Trade and Industry (METI).

These guidelines explain the basic concepts behind contracts for developing, procuring, and using AI-based products. Because it is now common to outsource AI development to external companies, the guidelines use case studies to explain contractual points to watch, such as where responsibility lies if an AI delivered by a vendor behaves unexpectedly or malfunctions, and how to handle data leakage and unauthorized use.

Deep Learning Development Standard Contract

The Deep Learning Development Standard Contract is a template for development contracts published by the Japan Deep Learning Association (JDLA).

This template was published because, although deep learning technology is attracting attention for its potential to solve social issues and create new added value, contract negotiations between startups and the large companies that outsource work to them often do not proceed smoothly, owing to gaps in contracting experience and skills and in knowledge and understanding of deep learning.

JDLA therefore formulated and published this template to make it easier for startups and large companies to conclude contracts. By encouraging collaboration between the two, the template is expected to help deep learning technology spread further through society.

The guidelines introduced here are not specific to security, but improving AI robustness and data quality increases resistance to cyberattacks against AI. In addition, legal arrangements such as contracts are indispensable for preventing and preparing for contract disputes and unforeseen situations.

Incorporating these guidelines into AI development can therefore be regarded as the “first step” toward developing secure AI.

Everyone involved in AI understands attack methods and countermeasures

To make effective use of the guidelines above, everyone involved in AI, including developers and product managers, should ideally understand attack methods against AI and their countermeasures.

For example, if the development team understands “attacks that target the process of collecting and creating training data (= training data poisoning)”, it will pay close attention to how training data is collected and obtained, which may help prevent poisoning. Likewise, if the team understands “attacks that mislead AI by manipulating its input data (= adversarial samples)”, it will recognize the need for an input data verification mechanism and implement one, which may prevent misclassification caused by adversarial samples.
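On the training-data side, one basic precaution is to record a cryptographic hash of each vetted data file and verify the hashes before every training run, so that files modified or added afterwards are caught. This is a hedged sketch using Python's standard `hashlib`; the function names are our own, the in-memory byte strings stand in for real files, and it cannot detect poisoned data that was already present when the manifest was created.

```python
import hashlib


def build_manifest(dataset):
    """Map each data file name to the SHA-256 digest of its contents."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in dataset.items()}


def verify_manifest(dataset, manifest):
    """List files that are missing, modified, or not covered by the manifest."""
    problems = []
    for name, expected in manifest.items():
        if name not in dataset:
            problems.append(f"missing: {name}")
        elif hashlib.sha256(dataset[name]).hexdigest() != expected:
            problems.append(f"modified: {name}")
    for name in dataset:
        if name not in manifest:
            problems.append(f"unexpected: {name}")
    return problems


# Toy dataset standing in for files that were reviewed before training.
vetted = {"a.csv": b"1,cat\n", "b.csv": b"2,dog\n"}
manifest = build_manifest(vetted)

# Later, one label is flipped and an extra file appears.
tampered = {**vetted, "b.csv": b"2,cat\n", "c.csv": b"3,cat\n"}
print(verify_manifest(vetted, manifest))    # []
print(verify_manifest(tampered, manifest))  # ['modified: b.csv', 'unexpected: c.csv']
```

In practice the manifest itself must be stored somewhere an attacker who can edit the data cannot also edit, otherwise the check proves nothing.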

This section introduces five sources of information useful for understanding such attack methods and countermeasures.

arXiv – Cryptography and Security

As many of you may know, arXiv is an archive site that stores and publishes papers submitted from all over the world.

On arXiv, the page the authors use as a source of information on AI attack methods and countermeasures is “Cryptography and Security” (cs.CR). More than ten cybersecurity papers are posted there almost every day, including many on attack methods against AI and their countermeasures, as well as research on using AI for security tasks such as attack detection and malware detection.

Because anyone can submit to arXiv, there are undeniably some “suspicious” papers, but many papers from world-class universities and research organizations are submitted as well, so it is a valuable source of information. In fact, we have verified attack methods and countermeasures against AI based on papers posted to arXiv and confirmed their effectiveness many times.

Papers tend to be primary sources, so checking the papers posted daily on arXiv lets you keep up with the latest attack methods and countermeasures against AI.
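If you want to follow cs.CR programmatically, arXiv offers a public query API that returns an Atom feed. The sketch below builds a query for the newest submissions in a category and extracts paper titles using only the standard library; the helper names are our own, and the network call is kept in a separate function so the parsing can be tried offline. arXiv asks API users to keep their request rate low, so avoid polling it in a tight loop.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET

API_URL = "http://export.arxiv.org/api/query"
ATOM = "{http://www.w3.org/2005/Atom}"  # Atom XML namespace used by the feed


def build_query_url(category="cs.CR", max_results=10):
    """URL for the newest submissions in an arXiv category."""
    params = {
        "search_query": f"cat:{category}",
        "sortBy": "submittedDate",
        "sortOrder": "descending",
        "max_results": max_results,
    }
    return API_URL + "?" + urllib.parse.urlencode(params)


def parse_titles(atom_xml):
    """Extract entry titles from an Atom feed, collapsing internal whitespace."""
    root = ET.fromstring(atom_xml)
    titles = []
    for entry in root.findall(f"{ATOM}entry"):
        title = entry.findtext(f"{ATOM}title", default="")
        titles.append(" ".join(title.split()))
    return titles


def fetch_latest(category="cs.CR", max_results=10):
    """Download the feed and return the titles (requires network access)."""
    with urllib.request.urlopen(build_query_url(category, max_results)) as resp:
        return parse_titles(resp.read())
```

Calling `fetch_latest("cs.CR", 20)` would return the titles of the 20 most recently submitted papers in that category, which you could then filter by keywords such as "adversarial" or "poisoning".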

AI Defense Laboratory

That said, most papers on arXiv are written in English and run to many pages. So, at the risk of self-promotion, on the “AI Defense Laboratory” website operated by the authors, we break down AI security papers that we consider noteworthy and publish explanations on our blog.

The AI Defense Laboratory also collects interesting papers, technical blogs, guidelines, and other material from overseas and publishes them irregularly. If you are interested, please take a look.

Adversarial Threat Matrix

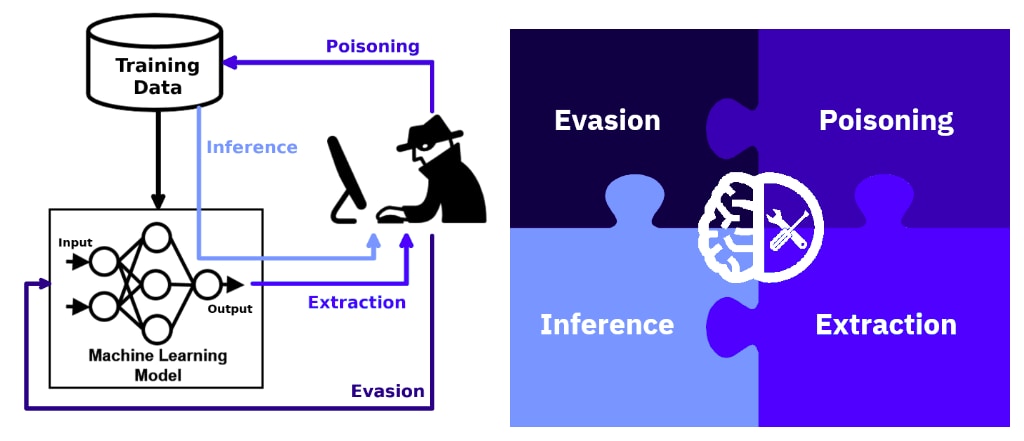

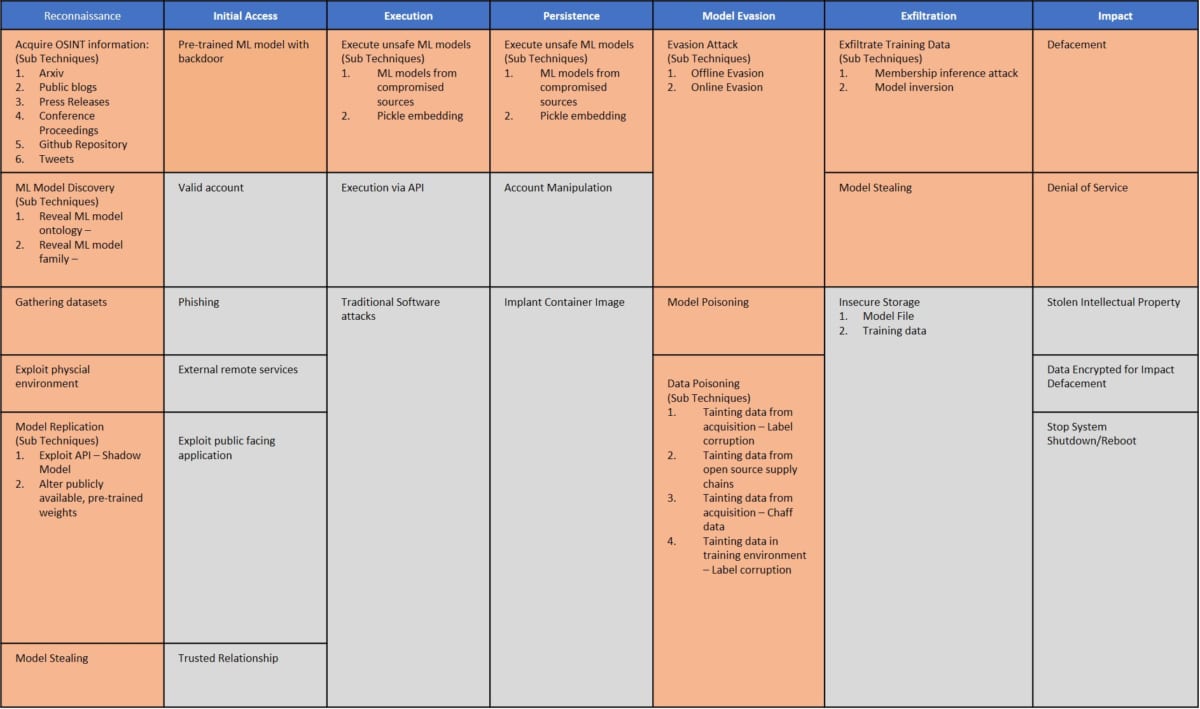

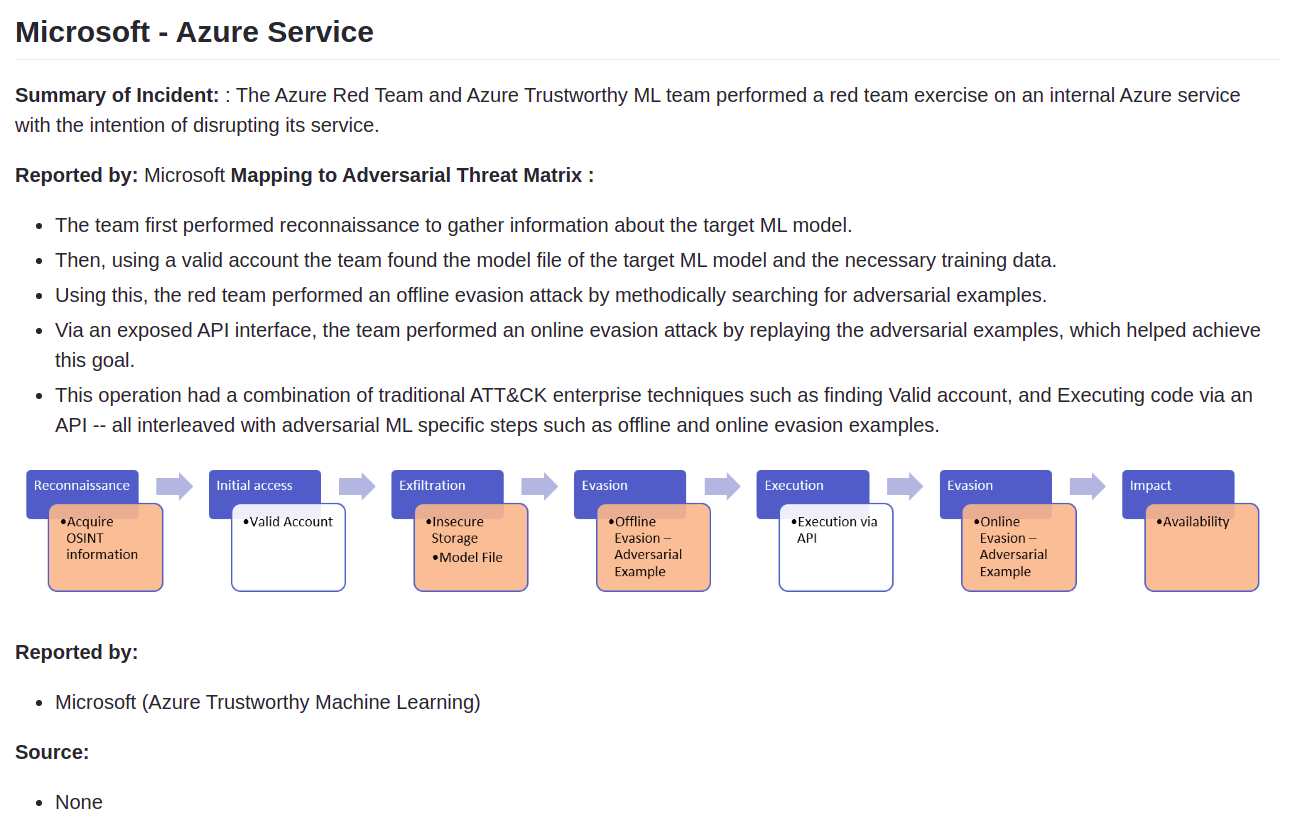

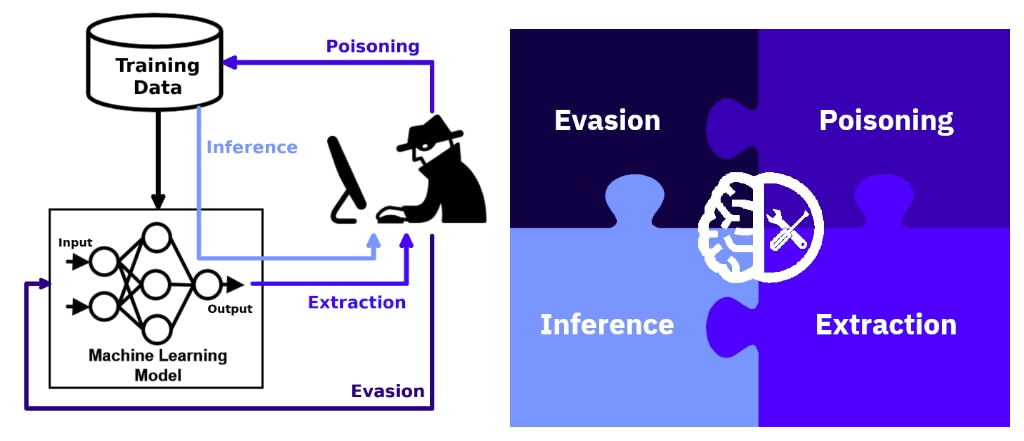

The “Adversarial Threat Matrix”, introduced in “Part 7: Background investigation of AI – OSINT against AI –”, is a framework that systematizes attack methods against AI and is designed to encourage cybersecurity experts to get involved in AI security.

This framework categorizes threats to AI into tactics such as Reconnaissance, Initial Access, Execution, Exfiltration, and Impact, and covers the specific techniques under each.

In addition, examples of attacks against actual products and services are published as “case studies”, which summarize in an easy-to-understand way how each attack was carried out.

By referring to the Adversarial Threat Matrix, you can understand attack methods against AI from the attacker's perspective.

Draft NISTIR 8269: A Taxonomy and Terminology of Adversarial Machine Learning

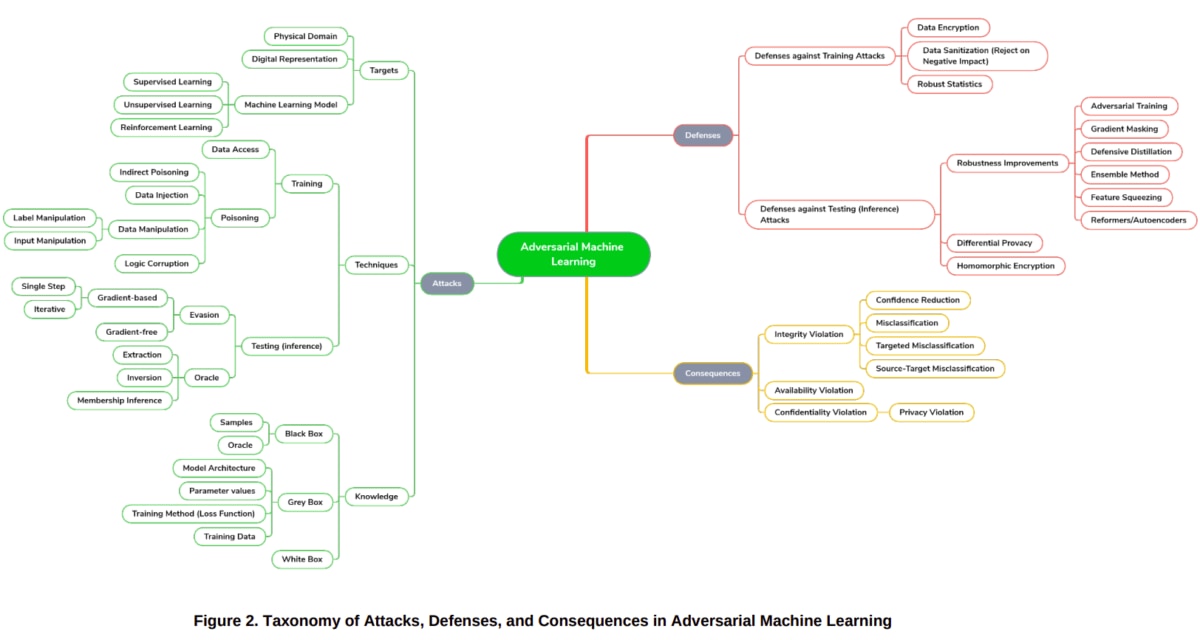

“Draft NISTIR 8269: A Taxonomy and Terminology of Adversarial Machine Learning” is a draft of AI security best practices being developed by the US National Institute of Standards and Technology (NIST). With the aim of ensuring AI safety, it categorizes AI-related security from three perspectives, “attack”, “defense”, and “impact”, and defines the associated terminology.

NISTIR 8269 was still a draft at the time of writing this column (February 2021), but it is reportedly planned to be reflected in “NIST SP 800-30: Guide for Conducting Risk Assessments”. The contents of NISTIR 8269 may well become best practices for AI security in the future.

The AI Defense Laboratory has also published a Japanese commentary on this draft on its blog, so please read it if you are interested.

AI VILLAGE

AI VILLAGE is a hacker community that researches using, attacking, and defending AI.

Since it started at DEF CON, the world's largest hacker conference, AI VILLAGE has planned and run various events related to AI security. Its many members come from a wide range of occupations, including researchers (among them students) and engineers from companies and universities, as well as sales staff and managers.

Members usually exchange views on Discord, covering not only attack methods and countermeasures against AI but also a wide range of topics such as AI-related papers, mathematics, AI ethics, and job introductions. Anyone can join the AI VILLAGE Discord, so if you are interested, please join and interact with other members.

The five sources of information introduced here are very useful in understanding attack methods and countermeasures against AI.

We believe that fostering security awareness among everyone who develops and uses AI will contribute greatly to AI safety through secure design, programming, and procurement, so we recommend reading through these sources.

Incorporate security testing into the development process

Even when guidelines, secure design, secure programming, and secure procurement are practiced, it may be difficult to prevent every vulnerability from being built in. It is therefore desirable to run security tests to detect vulnerabilities and fix them before releasing AI as a product or service.

By releasing AI after undergoing security testing, we can expect to reduce the probability that AI will be damaged by cyberattacks.
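As a minimal sketch of turning this into a step in the development process, a release pipeline could compare a model's accuracy on clean inputs with its accuracy under attack (measured, for example, with the Adversarial Robustness Toolbox) and block the release when robustness falls short. The function name and both thresholds below are hypothetical and would have to be chosen per product.

```python
def security_gate(clean_acc, robust_acc, min_robust_acc=0.70, max_drop=0.15):
    """Return (passed, reasons) for a release decision based on attack test results.

    clean_acc:  accuracy on unmodified test data
    robust_acc: accuracy on the same data after an attack (e.g. adversarial samples)
    """
    reasons = []
    if robust_acc < min_robust_acc:
        reasons.append(f"accuracy under attack {robust_acc:.2f} is below {min_robust_acc:.2f}")
    drop = clean_acc - robust_acc
    if drop > max_drop:
        reasons.append(f"accuracy drop under attack {drop:.2f} exceeds {max_drop:.2f}")
    return (not reasons, reasons)


print(security_gate(clean_acc=0.95, robust_acc=0.90))  # (True, [])
print(security_gate(clean_acc=0.95, robust_acc=0.50))  # (False, [two reasons])
```

In a CI job, the script would exit with a non-zero status when `passed` is False, so that a model that fails the security test never reaches the release step.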

In this section, we introduce two tools that support security testing for AI.

Adversarial Robustness Toolbox

Adversarial Robustness Toolbox (ART) is a Python library for AI security.

AI developers can use ART to test attack techniques (adversarial samples, data pollution, membership inference, etc.) that have been discussed in this series so far, as well as defense techniques against them.

CleverHans, Foolbox, AdvBox, and others are well-known Python libraries for AI security, but they were hard to use for security testing because they “support only some attack methods (such as adversarial samples)”, “do not support countermeasures”, “are no longer actively developed”, or “have poor documentation”.

ART, by contrast, has had features added frequently by many contributors since it was announced at Black Hat, one of the world's largest security conferences, in 2018. It also comes with extensive documentation and tutorials, so developers who know Python can use it easily.

The AI Defense Laboratory also offers a hands-on explanation of ART's basic usage titled “Adversarial Robustness Toolbox (ART) Super Introduction”, so please read it if you are interested.
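ART's `FastGradientMethod` (in `art.attacks.evasion`) is one of the adversarial-sample attacks it provides. To show what such an attack computes without installing anything, the sketch below implements the same one-step Fast Gradient Sign Method (FGSM) by hand for a binary logistic regression model whose weights we simply made up; with ART you would wrap a real model and let the library compute the gradients.

```python
import math


def predict_proba(x, w, b):
    """Probability of class 1 for a binary logistic regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """-1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(x, y, w, b, eps, clip=(0.0, 1.0)):
    """One-step FGSM: move each feature by eps in the direction that increases the loss.

    For binary cross-entropy with p = sigmoid(w.x + b), the gradient of the loss
    with respect to x is (p - y) * w.
    """
    p = predict_proba(x, w, b)
    grad = [(p - y) * wi for wi in w]
    lo, hi = clip
    # Keep the perturbed features inside the valid input range.
    return [min(hi, max(lo, xi + eps * sign(g))) for xi, g in zip(x, grad)]


w, b = [2.0, -1.0], -0.5   # made-up model parameters
x, y = [0.6, 0.4], 1       # a sample correctly classified as class 1
x_adv = fgsm(x, y, w, b, eps=0.2)
print(predict_proba(x, w, b) > 0.5, predict_proba(x_adv, w, b) > 0.5)  # True False
```

A perturbation of only 0.2 per feature flips the prediction, which is exactly the kind of weakness that testing with a library such as ART is meant to expose at scale.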

Adversarial Threat Detector

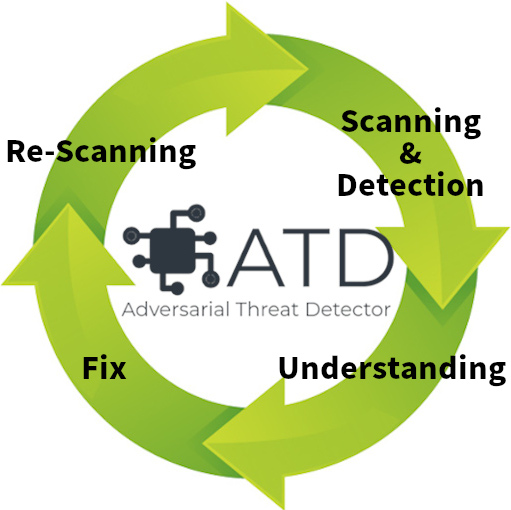

ART is a very useful Python library for AI security, but using it requires programming. The authors are therefore developing a vulnerability scanner called “Adversarial Threat Detector” (ATD), aiming for “fully automatic detection of AI vulnerabilities”.

By repeating the following four-step cycle, ATD aims not only to detect AI vulnerabilities but also to deepen developers' understanding of vulnerabilities and countermeasures.

- Scanning & Detection

- Developer understanding of the vulnerabilities

- Vulnerability fixes (Fix)

- Re-Scanning
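The cycle can be pictured as a loop that scans, shows the findings to the developer, applies fixes, and scans again until nothing is found. The sketch below is a hypothetical illustration of that loop, not ATD's actual code; `scan` and `fix` are placeholder callables that in practice would wrap attack and defense routines such as those in ART.

```python
def scan_fix_rescan(model, scan, fix, max_rounds=3):
    """Repeat scan -> report -> fix until a scan finds nothing or max_rounds is hit."""
    history = []
    for round_no in range(1, max_rounds + 1):
        findings = scan(model)              # Scanning & Detection (or Re-Scanning)
        history.append(findings)
        if not findings:
            break                           # nothing left to fix
        for finding in findings:            # report each finding to the developer
            print(f"round {round_no}: {finding}")
        model = fix(model, findings)        # Fix
    return model, history


# Toy stand-ins (hypothetical): the "model" is the set of defenses it has,
# and the scanner reports each required defense that is missing.
REQUIRED = {"adversarial training", "input validation"}


def toy_scan(model):
    return sorted(REQUIRED - model)


def toy_fix(model, findings):
    return model | set(findings)


model, history = scan_fix_rescan(set(), toy_scan, toy_fix)
```

Keeping the per-round findings in `history` makes it easy to show developers what was found and whether each fix actually removed it on the next scan.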

ATD is being developed in line with the Adversarial Threat Matrix mentioned above, and it currently uses the Adversarial Robustness Toolbox (ART) for vulnerability detection and defense.

ATD is currently a Minimum Viable Product (MVP) with very limited functionality, but we plan to keep adding features at a pace of roughly once a month, so if you are interested, please check back regularly for release information.

Summary

In this column, we have introduced guidelines and test tools that we believe will be useful in ensuring the safety of AI.

Algorithms, frameworks, and other AI-related technologies are advancing rapidly, and attack methods against AI are evolving along with them. There is no guarantee that what is secure today will still be secure tomorrow, so it is necessary to keep up with the latest information and continually update one's knowledge.

Cyberattacks against AI are not yet widespread, but Gartner's report “A Gartner Special Report: Top 10 Strategic Technology Trends for 2020” predicts that through 2022, 30% of all AI cyberattacks will leverage training data poisoning, AI model theft, or adversarial samples.

In a 2019 interview, Mikko Hyppönen of F-Secure, a well-known cybersecurity expert, said: “At present, people with sufficient knowledge and experience in machine learning are unlikely to engage in criminal activity. They have no need to turn to crime, because they can easily find well-paying jobs.”

On the other hand, he also said that in two to three years (around 2022, counting from the article's publication), “machine learning will become easier to handle, and anyone will be able to use it to carry out cyberattacks.” This comment answered the question “Will there come a time when attackers use machine learning to carry out cyberattacks?”, so the nuance differs, but we think the same applies to attacks on AI.

Therefore, before an era of rampant attacks on AI arrives, we believe it is important to build security mechanisms into AI development and operation and to prepare so that we do not fall behind attackers.

With this column, the eight-part “AI Security Super Primer” series comes to an end, but our work on AI security will continue. If you have the chance to meet us, please feel free to ask us anything about AI security. We will do our best to help ensure the safety of your AI.

Finally, ChillStack Co., Ltd. and Mitsui Bussan Secure Directions Co., Ltd. provide training on developing, providing, and using AI safely.

Through lectures and hands-on exercises, the training covers various attack methods against AI and their countermeasures, in addition to those explained in this column.