Foreword

Embeddings are one of the most versatile techniques in machine learning, and an important tool that every machine learning engineer should have in their toolbelt. It’s a shame, then, that so few people understand what embeddings are and what they’re good for!

The problem may be that the term “embedding” sounds somewhat abstract and arcane.

In machine learning, an embedding is a way of representing data as points in n-dimensional space so that similar data points cluster together.

Does that definition sound boring and unimpressive? Don’t be fooled. Understanding this machine learning multi-tool will let you build everything from search engines to recommendation systems to chatbots. You don’t have to be a data scientist with ML expertise to use it, and you don’t need a huge labeled dataset.

Hopefully that has convinced you how great this tool is. 🤞

Convinced? Then let’s dive deeper into embeddings. This post explores:

- What embeddings are

- What they are used for

- Where and how to find open source embedding models

- How to use open source embedding models

- How to build your own embeddings

What can you do with embeddings?

Before we talk about what embeddings are, let’s take a quick look at what you can do with them (it’s very exciting). Vector embeddings power:

- Recommendation systems (the “if you liked this movie, you’ll also like this one” kind of thing you see on Netflix)

- Search of any kind:

  - Text search (e.g. Google search)

  - Image search (such as Google image search)

  - Music search (“What is this song?”)

- Chatbots and question answering systems

- Data preprocessing (preparing data for input into machine learning models)

- One-shot/zero-shot learning (such as machine learning models that learn from almost no training data)

- Fraud detection/anomaly detection

- Typo detection and “fuzzy matching”

- Detecting obsolescence (drift) in ML models

- And many more!

Even if you’re not trying to do anything on this list, embeddings have such a wide range of applications that it’s worth reading on just in case. Don’t you think?

What are embeddings?

An embedding is a way of representing almost any kind of data (text, images, movies, users, music, and so on) as points in space whose positions carry semantic meaning.

The best way to intuitively understand what this means is with an example. So let’s take a look at one of the most popular embedding techniques, Word2Vec.

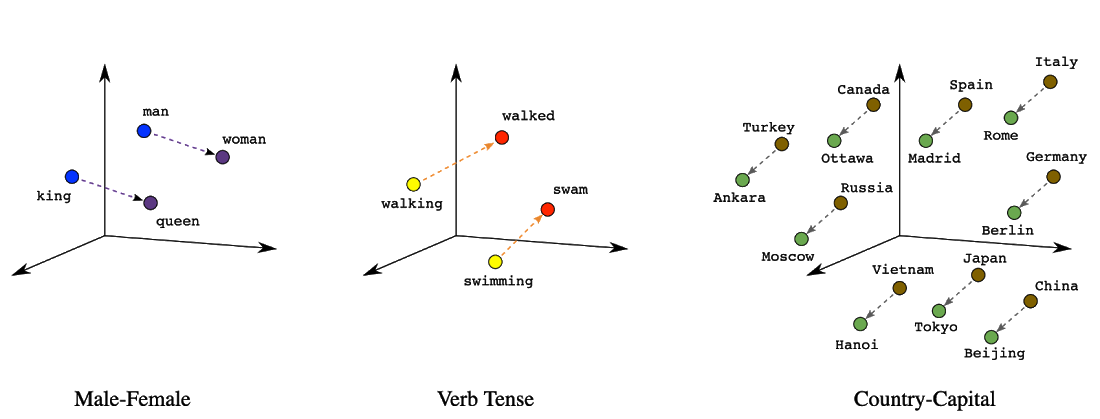

Word2Vec (short for “word to vector”) is a technique for embedding words, invented by Google in 2013. It takes a word as input and outputs n-dimensional coordinates (a “vector”), such that when you plot these word vectors in space, synonyms cluster together. The accompanying figure visualizes this kind of output.

Words plotted in three-dimensional space. Real embeddings typically have hundreds to thousands of dimensions, far too many for humans to visualize.

In Word2Vec, similar words are clustered spatially, so the vectors/points representing “King”, “Queen” and “Prince” are all clustered together. The same is true for synonyms (“walked”, “strolled”, “jogged”).

The same is true for other data types. Song embeddings place similar-sounding songs near each other. Image embeddings place similar-looking images close together. Customer embeddings place customers with similar buying habits nearby.

You can already see the usefulness of embeddings: they allow us to find similar data points. For example, you could write a function that, given a word (say, “King”), finds its 10 closest synonyms. This is called a nearest neighbor search. But a single word is not very interesting. What if we embedded the entire plot of a movie? Then, given the synopsis of one movie, a function could return 10 similar movies. Or, given one news article, it could recommend several articles that are semantically similar.
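A minimal sketch of such a nearest neighbor search follows. The three-dimensional vectors are invented for illustration and are not real Word2Vec output, and `nearest_neighbors` is a hypothetical helper name. It simply measures the distance from the query word to every other word and keeps the closest ones.

```python
import math

# Toy 3-dimensional "embeddings", invented purely for illustration.
# Real Word2Vec vectors have hundreds of dimensions.
EMBEDDINGS = {
    "king": [0.90, 0.80, 0.10],
    "queen": [0.88, 0.82, 0.15],
    "prince": [0.85, 0.75, 0.20],
    "walked": [0.10, 0.20, 0.90],
    "strolled": [0.12, 0.18, 0.88],
    "banana": [0.50, 0.05, 0.40],
}


def nearest_neighbors(word, embeddings, k=10):
    """Return the k words whose vectors lie closest to `word`."""
    query = embeddings[word]
    # Pair every other word with its Euclidean distance to the query.
    distances = [
        (math.dist(query, vector), other)
        for other, vector in embeddings.items()
        if other != word
    ]
    distances.sort()  # smallest distance first
    return [other for _, other in distances[:k]]


print(nearest_neighbors("king", EMBEDDINGS, k=2))  # prints ['queen', 'prince']
```

This brute-force loop compares the query against every stored vector, which is fine for a handful of items; large collections usually rely on approximate nearest neighbor indexes instead.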

In addition, embeddings let you compute numerical similarity scores between data points: “How similar is this article to that article?” One way to do this is to compute the distance between two embedded points in space and treat closer points as more similar. This measure is called the Euclidean distance. (Dot product, cosine distance, and other trigonometric measures can also be used.)
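To make the difference between these measures concrete, here is a small sketch in plain Python. The three “article” vectors are made up for illustration; real embeddings would come from a model.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two points; smaller means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dot_product(a, b):
    """Sum of element-wise products; larger means more similar."""
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors, ignoring their lengths."""
    norm_a = math.sqrt(dot_product(a, a))
    norm_b = math.sqrt(dot_product(b, b))
    return dot_product(a, b) / (norm_a * norm_b)


# Made-up embeddings for three articles.
article_a = [1.0, 2.0, 2.0]
article_b = [2.0, 4.0, 4.0]   # same direction as article_a, twice as long
article_c = [2.0, -1.0, 0.0]  # perpendicular to article_a

print(euclidean_distance(article_a, article_b))
print(cosine_similarity(article_a, article_b))
print(cosine_similarity(article_a, article_c))
```

Note that `article_a` and `article_b` point in exactly the same direction, so their cosine similarity is as high as it can be even though their Euclidean distance is not zero. Which measure is appropriate depends on how the embedding model was trained.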

Similarity scores are useful for applications such as duplicate detection and face recognition. For face recognition, you embed photographs of people’s faces; if two photographs have a high similarity score, you can conclude that they show the same person. Likewise, if you embed all the photos taken with your phone’s camera and find photos that sit very close together in the embedding space, you can conclude that they are near-duplicates.

Similarity scores can also be used to correct typos. In Word2Vec, a word and its common misspellings (“hello”, “helo”, “helllo” and “hEeeeelO”) tend to have high similarity scores because they are all used in the same contexts.

The graph above also illustrates another neat property of Word2Vec: it can express grammatical meanings such as gender and verb tense along different axes. That means that by adding and subtracting word vectors, we can solve analogies such as “man is to woman as king is to ____”. This is a very nice feature of word vectors, but it does not always carry over in a useful way to embeddings of more complex data types such as images or long chunks of text (more on that later).
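The vector arithmetic behind such analogies can be sketched as follows. The two-dimensional vectors are hand-picked so that one axis loosely stands for “royalty” and the other for “gender”; they are assumptions for illustration, not real Word2Vec output, and `solve_analogy` is a hypothetical helper.

```python
import math

# Hand-picked toy vectors: [royalty, gender]. Not real Word2Vec output.
VECTORS = {
    "man": [0.1, 0.0],
    "woman": [0.1, 1.0],
    "king": [0.9, 0.0],
    "queen": [0.9, 1.0],
    "prince": [0.7, 0.0],
    "princess": [0.7, 1.0],
}


def solve_analogy(a, b, c, vectors):
    """Answer "a is to b as c is to ?" by computing b - a + c."""
    target = [
        vb - va + vc
        for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])
    ]
    # Pick the word nearest to the target point, skipping the input words.
    candidates = [w for w in vectors if w not in (a, b, c)]
    return min(candidates, key=lambda w: math.dist(vectors[w], target))


print(solve_analogy("man", "woman", "king", VECTORS))  # prints queen
```

Subtracting “man” from “woman” isolates the gender direction, and adding that difference to “king” lands on (or near) “queen”.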

What kinds of things can be embedded?

Text

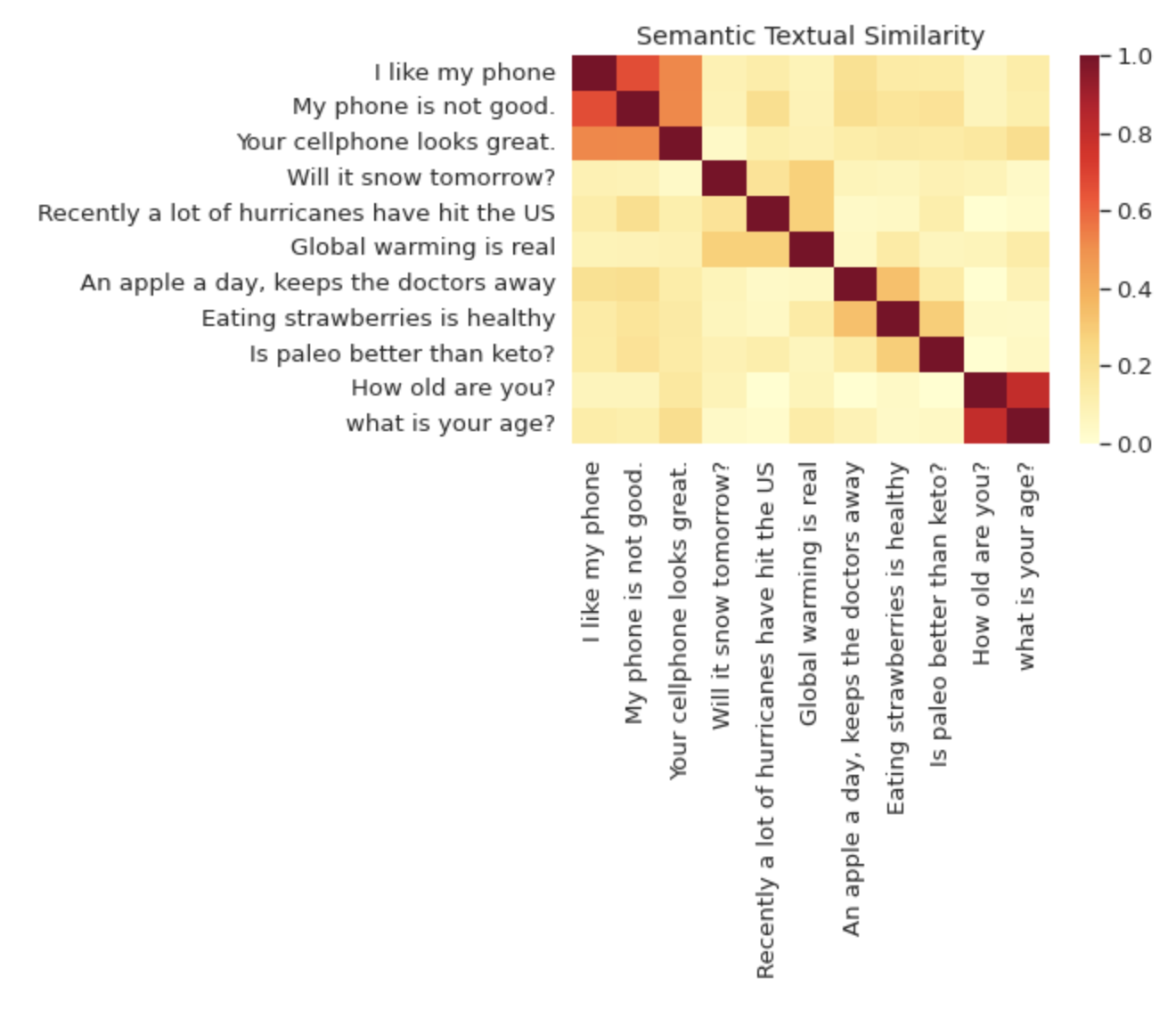

You can embed not only individual words (as in Word2Vec), but entire sentences and chunks of text. One of the most popular open source embedding models is the Universal Sentence Encoder (USE). The name is a bit misleading, as USE can encode whole chunks of text, not just sentences. The accompanying visual from the TensorFlow website illustrates this: a heatmap shows how similar different sentences are according to their distance in the embedding space.

The Universal Sentence Encoder model has many uses, especially for text retrieval. The reason is that USE embeddings capture the meaning of sentences rather than overfitting on individual words.

For example, imagine that I, the author of this article, am building a searchable database of news articles.

Dale Times news article database


Now, let’s search this database with the text query “food”. The most relevant result in the database is an article about the infamous burrito/taco controversy, even though the word “food” does not appear in the article headline. You get results like this when you search over the USE embeddings of the headlines rather than the raw text itself, because USE captures the semantic similarity of text, not the overlap of specific words.

It’s worth noting that many data types, such as images with captions and movies with subtitles, can be associated with text, so the technique can also be applied to multimedia retrieval. A searchable video archive is one example.

See also: How to do text similarity search and document clustering in BigQuery

Images

Embedding images also enables reverse image search, or “search by image.” One example is Vision Product Search, which is also the name of a Google Cloud product.

For example, imagine a clothing store wants to build a search feature. You might want to support text queries like “leather, goth, studs, miniskirts”. With something like USE embeddings, you could match the user’s text query against product descriptions. But wouldn’t it be nicer if shoppers could also search by image instead of text? For example, could they upload a trending top from Instagram and see it matched with similar products in stock?

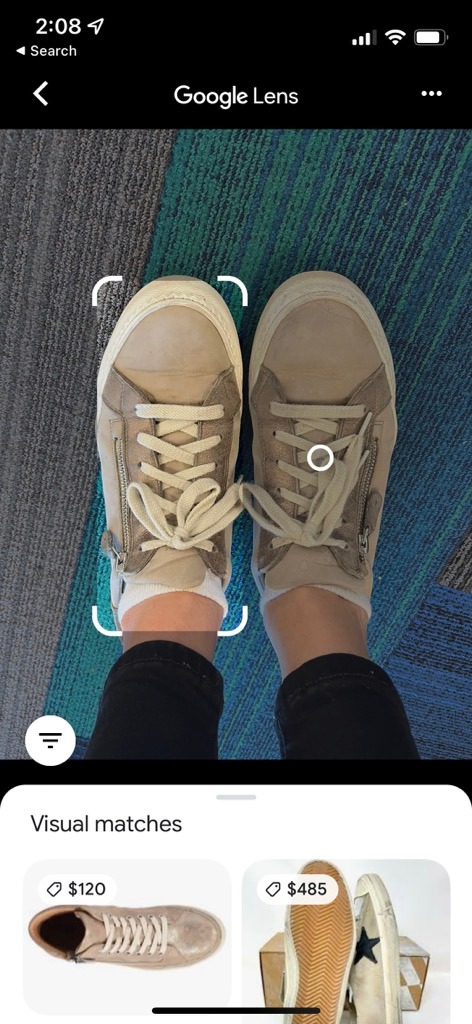

One of my favorite image search products is Google Lens. It matches a camera photo with visually similar products. In the accompanying image, it is finding products online that look similar to my sneakers.

As with text embeddings, there are many freely available models for image embeddings. The TensorFlow Hub page offers a number of image embedding models labeled “feature vector”. These embeddings were originally extracted from large deep learning models trained to perform image classification on large datasets. If you look at the MobileNet embedding image search demo, you can upload a photo and see how it searches all of Wikimedia to find similar images.

Unfortunately, unlike sentence embeddings, open source image embeddings often need to be tuned for specific tasks in order to be of high quality. For example, if you want to build a clothes similarity search, you will likely need a clothes dataset to train the embedding. (We’ll talk a bit more about how embeddings are learned later.)

See also: Compressing, Searching, Interpolating, and Clustering Images with Machine Learning

Products and shoppers

Embeddings are especially useful in retail for product recommendations. How does Spotify know which songs to recommend based on a listener’s listening history? How does Netflix decide which movies to recommend? How does Amazon know which items to recommend to shoppers from their purchase history?

In addition, a Netflix Technology Blog post published on Medium on December 11, 2020, “Machine Learning Supports Content Decision Makers”, explains how Netflix uses embeddings when producing an original work to answer questions such as “Have there been similar works in the past?” and “How many viewers can be expected in each region?”

Embeddings are currently the way to build state-of-the-art recommendation systems. Using historical purchase and viewing data, retailers train models that embed users and items.

What does it mean to build a recommendation system with an embedding model?

For example, let’s say I’m a frequent shopper at a fictional high-tech bookstore called “BookShop.” BookShop used historical purchase data to train two embedding models.

The first, a user embedding model, maps me, the book purchaser, into user space based on my purchase history. For example, I often buy O’Reilly technical books, pop science, and fantasy, so this model places me close to other geeks in user space.

BookShop also maintains an item embedding model that maps books into item space. In item space, books of similar genres and topics are expected to lie close together. So, since Philip K. Dick’s Do Androids Dream of Electric Sheep and William Gibson’s Neuromancer are similar in topic and style, the vectors representing the two books should be near each other.

How are embeddings created and where can I get them?

So far, we have covered:

- What kinds of apps embeddings power

- What is embedding (mapping data as points in space)

- Some of the data types that can actually be embedded

What we haven’t covered yet is where embeddings come from. (When a data scientist and a SQL database love each other very much…) More specifically, let’s talk about how to build a machine learning model that ingests data and outputs embeddings that are semantically meaningful for your use case.

As with most machine learning, there are two options for building embedding models: the pretrained model route and the DIY, train-your-own-model route.

Pretrained model

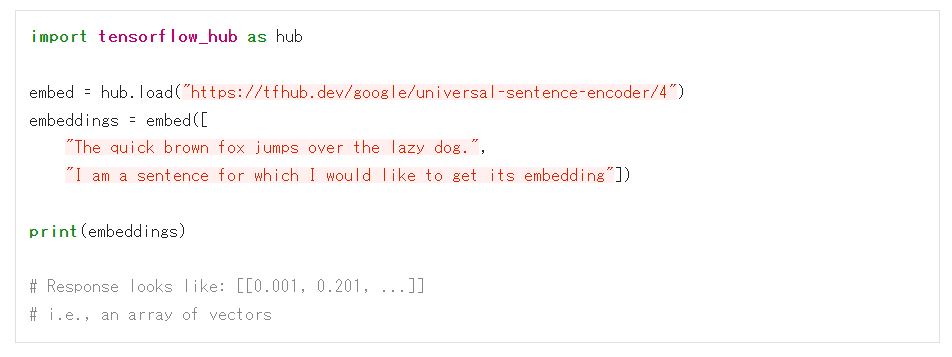

If you want to embed text, i.e. do text search or text similarity search, you’re in luck. There are tons of pretrained text embedding models, and they are free and easy to use. One of the most popular is the aforementioned Universal Sentence Encoder model, available for download from the TensorFlow Hub model repository. Using this model in code is very easy, and the TensorFlow website provides a short Python example.
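As a rough sketch of what this looks like, the snippet below loads the model through the `tensorflow_hub` package. It assumes TensorFlow and TensorFlow Hub are installed and that version 4 of the model is the one you want (the version in the URL is an assumption). `embed_sentences` is a hypothetical wrapper written for this illustration, not part of any library.

```python
def embed_sentences(
    sentences,
    model_url="https://tfhub.dev/google/universal-sentence-encoder/4",
):
    """Return one embedding vector per input sentence.

    Hypothetical helper for illustration. The import is deferred so that
    defining this function does not itself require TensorFlow.
    """
    # Assumes: pip install tensorflow tensorflow-hub
    import tensorflow_hub as hub

    model = hub.load(model_url)  # downloads the model on first use
    return model(sentences)      # tensor with one row per sentence
```

Calling `embed_sentences(["I like tacos.", "Burritos are great."])` should return one vector per sentence. In a real application you would load the model once and reuse it rather than reloading it on every call.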

To make practical use of these text embeddings, we need to implement nearest neighbor search and similarity calculation. I recently wrote a blog post about this, which walks through an intelligent app that uses sentence embeddings to work with text semantically.

Open source image embeddings are also readily available on TensorFlow Hub. Again, it is often useful to fine-tune this kind of embedding with domain-specific data (clothing pictures, dog breeds, etc.) for domain-specific tasks.

Finally, I would be remiss not to mention one of the trendiest embedding models released recently: OpenAI’s CLIP model. CLIP takes images and text as input and maps both data types into the same embedding space. With this, you can build software that determines which caption (text) best fits an image.
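Once images and captions live in the same space, picking the best caption reduces to a similarity comparison. The sketch below shows only that last step. The vectors are invented stand-ins for what a model like CLIP would produce, and `best_caption` is a hypothetical helper; nothing here calls CLIP itself.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_vector, caption_vectors):
    """Return the caption whose embedding is most similar to the image's."""
    return max(
        caption_vectors,
        key=lambda caption: cosine_similarity(
            image_vector, caption_vectors[caption]
        ),
    )


# Invented embeddings standing in for real model output.
image_vector = [0.9, 0.1, 0.2]
caption_vectors = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a photo of a cat": [0.1, 0.9, 0.2],
    "a bowl of soup": [0.1, 0.2, 0.9],
}

print(best_caption(image_vector, caption_vectors))  # prints a photo of a dog
```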

Train your own embeddings

Beyond general-purpose text and image embeddings, we often have to train embedding models on our own data. One of the most popular ways to build your own embedding model today is called the Two-Tower model. Here is how the Google Cloud site describes this model.

The Two-Tower model trains embeddings using labeled data. It pairs similar types of objects (user profiles, search queries, web documents, answers, images, etc.) in the same vector space so that related items end up close to each other. The Two-Tower model consists of two encoder towers: the query tower and the candidate tower. These towers embed individual items into a shared embedding space, where the matching engine can retrieve similar items.

This article won’t go into detail about how the Two-Tower model is trained. For that, see Google Cloud’s guide to training embeddings using the Two-Tower built-in algorithm, or the TensorFlow Recommenders page that shows how to train your own Two-Tower recommendation model with TensorFlow.
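The structure described above can be sketched in a few lines. This is a deliberately simplified illustration: each “tower” is a single hand-written weight matrix rather than a trained neural network, the feature names and weights are invented, and “Bread Basics” is a hypothetical book title. In a real model the weights are learned from labeled query/candidate pairs.

```python
def encode(features, weights):
    """One linear 'tower': multiply a feature vector by a weight matrix."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


# Invented weights. Both towers output 2-D vectors: [sci-fi, food].
# Query features: [sci-fi purchases, cooking purchases, baking purchases]
QUERY_TOWER = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 1.0],
]
# Candidate features: [robots, spaceships, recipes, food photos]
CANDIDATE_TOWER = [
    [0.5, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.5, 0.5],
]


def score(query_features, candidate_features):
    """Dot product of the two towers' outputs in the shared space."""
    q = encode(query_features, QUERY_TOWER)
    c = encode(candidate_features, CANDIDATE_TOWER)
    return sum(x * y for x, y in zip(q, c))


def rank_candidates(query_features, candidates):
    """Sort candidate names from best to worst match for the query."""
    return sorted(
        candidates,
        key=lambda name: score(query_features, candidates[name]),
        reverse=True,
    )


shopper = [3.0, 0.0, 1.0]  # mostly sci-fi purchases, a little baking
books = {
    "Neuromancer": [1.0, 1.0, 0.0, 0.0],
    "Do Androids Dream of Electric Sheep": [1.0, 0.0, 0.0, 0.0],
    "Bread Basics": [0.0, 0.0, 1.0, 1.0],  # hypothetical title
}

print(rank_candidates(shopper, books))
```

The key point is that the two towers accept different kinds of input (three query features versus four candidate features) yet produce vectors of the same size, which is what makes the dot product between them meaningful.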

Anyway, that’s all for now. Next time, I will talk about how to build an application based on embeddings. As always, thank you for reading.